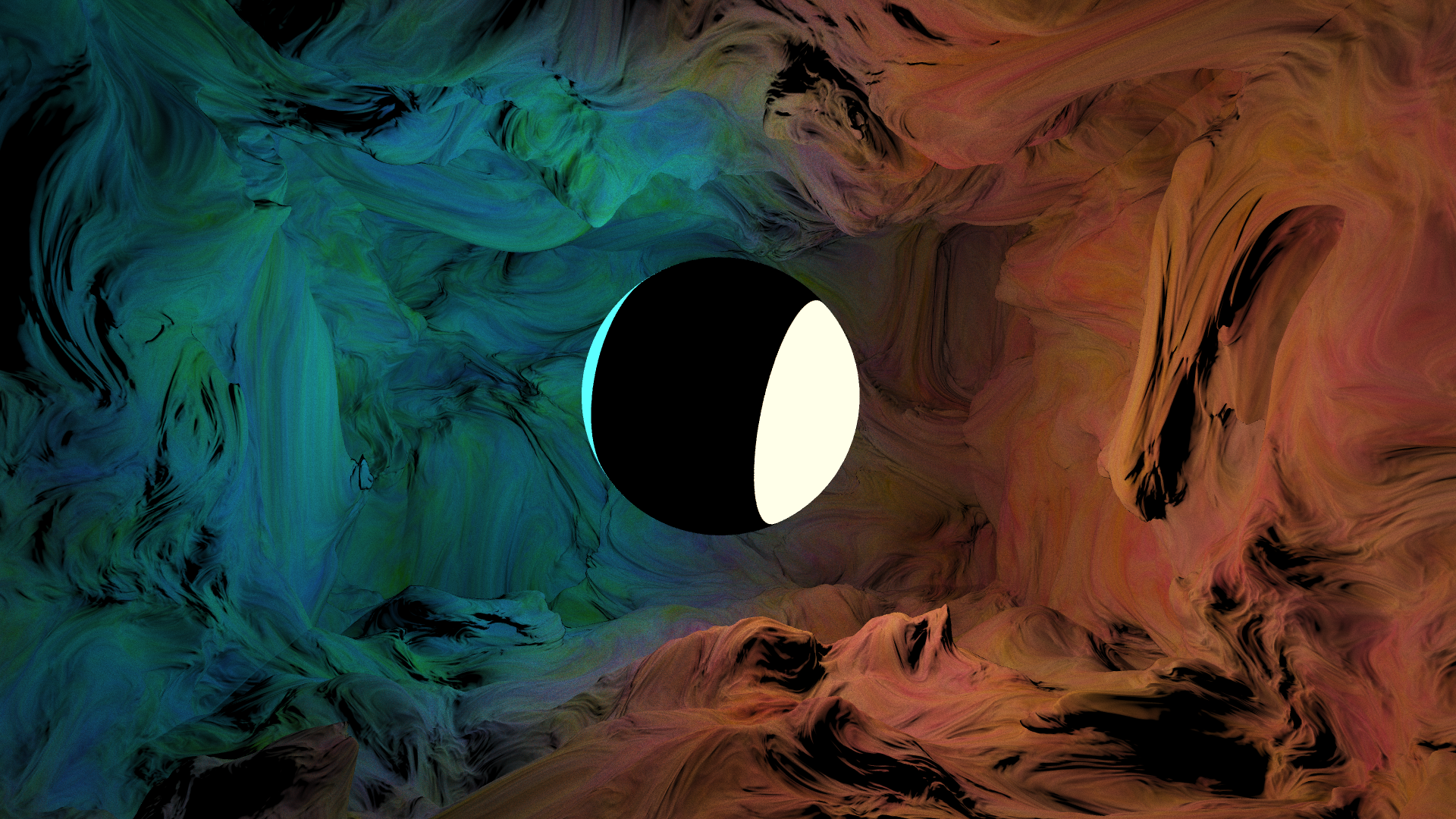

2 KB of GLSL shader code, released at TokyoDemoFest 2021

[pouët]

This entry demonstrates a way to approximate the lighting you get from a path tracer that is fast enough to run in real time, while still using SDF ray marching to render the scene.

A typical path tracer with next event estimation

You can think of a path tracer as a system that computes how bright a given point in the scene appears. It starts by shooting a ray from the camera into the scene. At the intersection point, it needs to estimate how much light leaves that point toward the camera, since that value directly determines how bright the corresponding pixel in the final image should be. We will consider a path tracer with two estimators for this value: BRDF sampling and NEE.
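For reference, the value both estimators target is the outgoing radiance from the standard rendering equation. The notation is the usual textbook one, not taken from the shader: $L_e$ is emitted radiance, $f_r$ the BRDF, $L_i$ incoming radiance, $n$ the surface normal and $\Omega$ the hemisphere around $n$.

$$
L_o(x, \omega_o) = L_e(x, \omega_o) + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (n \cdot \omega_i)\, d\omega_i
$$

Roughly speaking, BRDF sampling draws $\omega_i$ in proportion to $f_r\,(n \cdot \omega_i)$, while NEE draws $\omega_i$ toward points on the light sources.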

In BRDF (bidirectional reflectance distribution function) sampling, the renderer picks a random direction into which the incoming ray is likely to be reflected, intersects that ray with the scene and computes the brightness of the new intersection point recursively. This process typically repeats until a light source is hit or a maximum depth is reached.

In NEE (next event estimation), the renderer knows where every light source in the scene is and uses that knowledge to sample the lights directly. From the intersection point, it picks a point on a light source and checks whether that point is visible from the intersection point. If it is, the renderer adds the corresponding contribution.

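Once both estimators have produced a sample, the two samples are combined using multiple importance sampling (MIS). MIS weights each sample according to how likely each strategy was to generate it, so each estimator dominates where it has the lower variance.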
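One common way to compute the MIS weights is Veach's power heuristic with exponent 2. The sketch below assumes that both pdfs are expressed in the same measure (for example, solid angle). It also assumes the caller already has, for each sample, the integrand value and the pdf under both strategies. The function names are hypothetical.

```c
/* Power heuristic (exponent 2) weight for a sample drawn from a strategy with
   density pdf_this, when the other strategy would have drawn it with pdf_other. */
float power_heuristic(float pdf_this, float pdf_other) {
    float a = pdf_this * pdf_this;
    float b = pdf_other * pdf_other;
    return (a + b) > 0.0f ? a / (a + b) : 0.0f;
}

/* Combine one BRDF sample and one light (NEE) sample of the same integral.
   f_*: integrand value f_r * L_i * cos(theta) at each sample.
   pb_*: BRDF-strategy pdf, pl_*: light-strategy pdf, both in solid angle. */
float mis_combine(float f_brdf, float pb_brdf, float pl_brdf,
                  float f_light, float pl_light, float pb_light) {
    float estimate = 0.0f;
    if (pb_brdf > 0.0f)
        estimate += power_heuristic(pb_brdf, pl_brdf) * f_brdf / pb_brdf;
    if (pl_light > 0.0f)
        estimate += power_heuristic(pl_light, pb_light) * f_light / pl_light;
    return estimate;
}
```

For any given direction, the BRDF weight and the light weight sum to one. This property keeps the combined estimator unbiased.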

What the shader does instead

This entry uses ideas similar to those described above, but the scene has been carefully designed so that large parts of the path tracer can be optimized away.

The scene has exactly one light source, an emissive sphere positioned at the origin, which makes NEE easy to implement with little code. Additionally, the dripstone cave around the sphere is (mostly) convex. This means the light source is visible from almost every point on the cave wall, so the visibility check can be omitted. Finally, since the cave walls are relatively dark, the contribution from the BRDF estimator turns out to be so small that it can be removed entirely.
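Here is a rough, idealized check on that last point. This is an assumption for illustration, not an analysis from the entry. Model the cave as a closed diffuse enclosure with uniform albedo $\rho$, in which every wall point sees the rest of the wall equally. This holds exactly for the inside of a sphere. Also ignore the part of the wall that the light sphere blocks. Under these assumptions, each bounce scales the wall-averaged direct irradiance $\bar{E}_{\text{direct}}$ by another factor of $\rho$:

$$
E_{\text{indirect}} = \left(\rho + \rho^2 + \rho^3 + \cdots\right) \bar{E}_{\text{direct}} = \frac{\rho}{1 - \rho}\, \bar{E}_{\text{direct}}
$$

For an illustrative dark albedo of $\rho = 0.1$, the indirect term is about 11% of the average direct irradiance. In this idealized enclosure it is also the same at every wall point, so dropping it removes a small, uniform ambient term rather than any shading detail.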

Now that we have removed half the path tracer, this is what the renderer actually does:

- Intersect the camera ray with the scene (using sphere tracing)

- If we hit the sphere, return the emission texture's value at the intersection point.

- Otherwise, repeat the following 64 times:

  - Pick a random point on the side of the sphere facing the intersection point.

  - Compute the emission at that point by looking up the emission texture.

  - Weight the sample using the surface normal and wall color.

  - Add the contribution to the accumulator.

The result is an intro that looks path traced, but it only computes one ray intersection per pixel per frame, because the 64 light samples need no shadow rays. As a result, it runs smoothly in real time.

Conclusion

In retrospect, I kinda wish I had made a 4k intro out of this, maybe something similar to Think Outside the Box, rather than releasing it as a shader without music. Still, the entry was very well received as-is. It ended up winning 2nd place in the compo, and the organizers sent a trophy halfway around the world for my efforts.